If the Apollo bot gives incorrect information in a chat, follow these steps to identify the cause and implement a fix.

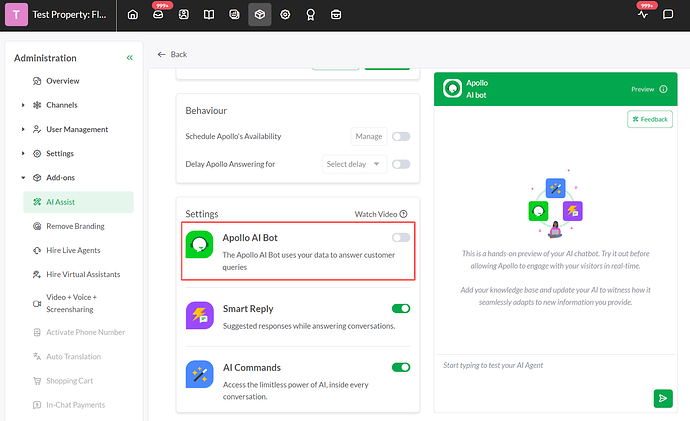

Step 1: (Optional) Disable Apollo Bot Temporarily

To make sure no more incorrect answers are given while you troubleshoot, you can stop Apollo from accepting chats. This is done from the add-on settings.

Once toggled off, Apollo will not attempt to join incoming chats, but you can still use the Preview tool for troubleshooting.

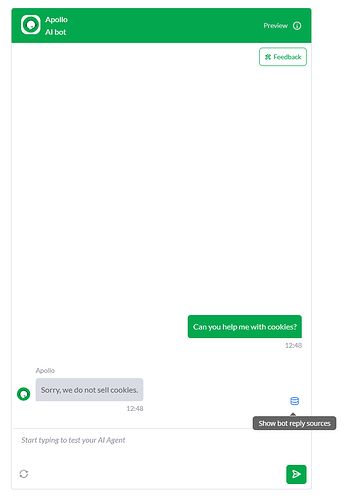

Step 2: Replicate the Question in Preview Mode

Next, replicate the question in Preview mode to reproduce the incorrect answer. Sometimes you can copy the visitor’s message directly. However, if the error occurred mid-conversation, you might need to re-create the conversation message by message to understand exactly what went wrong.

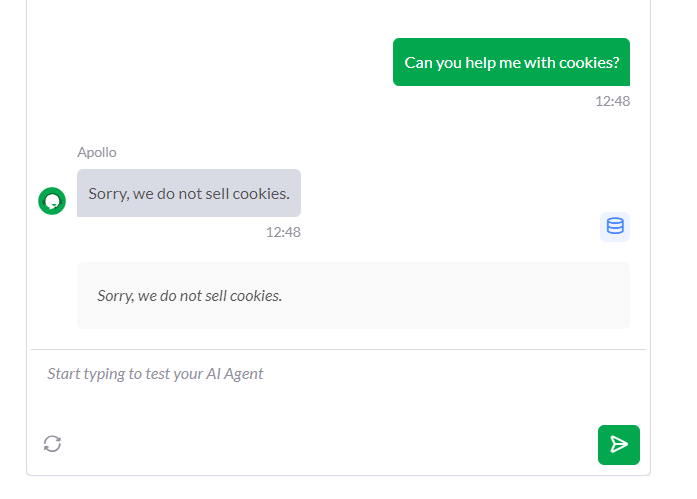

Step 3: Evaluate the Data Source

Open the Data Source for the incorrect answer and evaluate the sources that were found for that inquiry. Carefully inspect the information to determine what might have led Apollo to the wrong conclusion.

If you do not see the Data Source icon, make sure that the relevant Data Source is enabled and that Knowledge Base Articles are not set to Private.

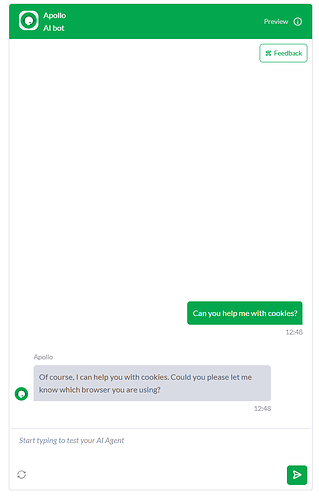

In this example, we want Apollo to act as a helpful support agent and help visitors clear their browser cookies, but when asked “Can you help me with cookies?” it does not do so:

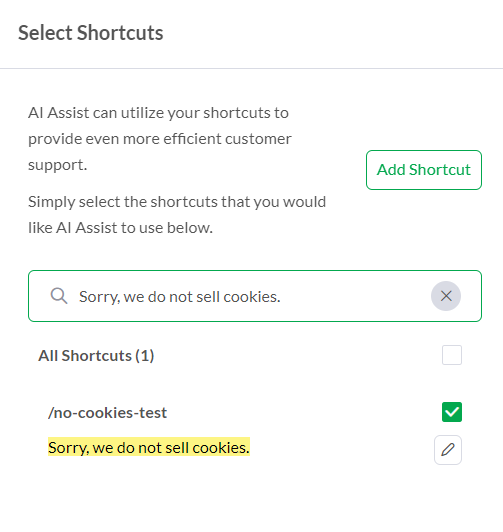

Expanding the Data Source shows that “Sorry, we do not sell cookies” is listed among the sources, and that it comes from Shortcuts.

Once the Shortcut that caused the confusion is unselected, we get the answer we wanted.
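
To see why a single Shortcut can compete with the intended article, here is a minimal Python sketch of word-overlap matching. It is only an illustration: how Apollo actually selects sources is not described in this article, and the source names, the article text, and the `overlap_scores` helper are all hypothetical.

```python
import re

def words(text):
    """Lowercase the text and return its set of distinct words."""
    return set(re.findall(r"[a-z]+", text.lower()))

def overlap_scores(question, sources):
    """Count how many distinct words each source shares with the question."""
    q = words(question)
    return {name: len(q & words(text)) for name, text in sources.items()}

# Hypothetical Data Source entries modeled on the example above.
sources = {
    "shortcut": "Sorry, we do not sell cookies",
    "kb_article": "To clear your browser cookies, open Settings and choose Privacy.",
}

question = "Can you help me with cookies?"

# Both entries share only the word "cookies" with the question,
# so the question alone cannot tell them apart.
scores = overlap_scores(question, sources)
print(scores)

# Unselecting the confusing Shortcut leaves only the intended article.
remaining = {name: text for name, text in sources.items() if name != "shortcut"}
scores_after = overlap_scores(question, remaining)
print(scores_after)
```

In this toy model both entries tie on the single word “cookies”, which is why removing or rewording the ambiguous entry is often the quickest fix.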

Common Reasons for a Confusing Data Source

Understanding common reasons why the Apollo bot may be confused can help in addressing the issue. These reasons include:

Too much unedited information in the Data Source: It’s vital to review and edit Knowledge Base (KB) Articles and data fetched by website ingest so that the text is structured in a way the AI can easily interpret.

Ambiguous Queries: When a user’s question is ambiguous or lacks specific details, Apollo may struggle to find the correct answer. Phrasing the Data Source materials so that they convey the general idea, rather than the answer to one specifically worded question, can help with this.

Outdated Information: If the Data Source contains old or outdated information, Apollo may provide answers that are no longer relevant. Regularly updating your data sources is essential.

Mismatched Context or Terms: Sometimes, specific terms or context can be misunderstood by the AI, especially if product or service names contain generic industry terms. Ensuring that the different products or services offered are clearly laid out in the provided materials is crucial.
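
For the outdated-information case, a periodic audit can help. The Python sketch below assumes you keep your own list of Data Source entries with a last-updated date; the entry titles, the `stale_entries` helper, and the 180-day threshold are hypothetical and not part of Apollo.

```python
from datetime import date, timedelta

def stale_entries(entries, today, max_age_days=180):
    """Return titles of entries last updated more than max_age_days ago."""
    cutoff = today - timedelta(days=max_age_days)
    return [e["title"] for e in entries if e["last_updated"] < cutoff]

# Hypothetical entries; in practice this list would come from your own records.
entries = [
    {"title": "How to clear browser cookies", "last_updated": date(2024, 5, 2)},
    {"title": "Holiday opening hours 2022", "last_updated": date(2022, 12, 1)},
]

flagged = stale_entries(entries, today=date(2024, 6, 1))
print(flagged)  # ['Holiday opening hours 2022']
```

Entries flagged this way are candidates for review, not automatic removal, since some older content may still be accurate.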

Next Steps

From this point, resolving the problem usually has to be handled case by case. Depending on the reason identified, you may need to edit the content, update the information, clarify ambiguities, or adjust your approach to the question.

If you continue to face issues or need further assistance, please don’t hesitate to reach out to us for support.